A robots.txt file tells search engine spiders which pages or files they should or shouldn't request from your site. It is mainly a way of preventing your site from being overloaded by requests, not a security mechanism: well-behaved spiders choose to follow it, but nothing forces them to, and some spiders will access the site anyway. It really shouldn't be used as a way of preventing access to your site. If you need to keep a page out of search results, use a noindex directive within the page itself; if you need to prevent access altogether, password protect the page.

The syntax of a robots.txt file is pretty simple. Each group of rules must be preceded by the user agent it applies to, with the wildcard * applying to all user agents.

```
User-agent: *
```
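To make the idea of groups concrete, here is a minimal PHP sketch (not part of Drupal or the RobotsTxt module) that splits robots.txt content into groups keyed by user agent and then picks the group a given spider should follow, falling back to the * group. The function names parseRobotsTxt() and rulesForAgent() are hypothetical, and the sketch is deliberately simplified: it only understands the User-agent line plus the Allow and Disallow rules described below, and it matches user agent names exactly (ignoring case) rather than the looser way real crawlers match them.

```php
<?php

/**
 * Splits robots.txt content into rule groups keyed by user agent.
 *
 * Hypothetical, simplified helper: only User-agent, Allow and Disallow
 * lines are understood; comments, Sitemap lines and unknown fields are
 * skipped. Each group is a list of ['allow' => bool, 'path' => string].
 */
function parseRobotsTxt(string $content): array {
  $groups = [];
  $currentAgents = [];
  // Becomes TRUE once the current User-agent lines have rules under them,
  // so that the next User-agent line starts a new group.
  $inRules = FALSE;

  foreach (preg_split('/\R/', $content) as $line) {
    // Remove comments and surrounding whitespace.
    $line = trim(preg_replace('/#.*/', '', $line));
    if ($line === '' || strpos($line, ':') === FALSE) {
      continue;
    }
    [$field, $value] = array_map('trim', explode(':', $line, 2));
    $field = strtolower($field);

    if ($field === 'user-agent') {
      if ($inRules) {
        $currentAgents = [];
        $inRules = FALSE;
      }
      $agent = strtolower($value);
      $currentAgents[] = $agent;
      // Groups naming the same agent are merged.
      $groups[$agent] = $groups[$agent] ?? [];
    }
    elseif ($field === 'allow' || $field === 'disallow') {
      $inRules = TRUE;
      // A rule applies to every user agent named at the top of its group.
      foreach ($currentAgents as $agent) {
        $groups[$agent][] = ['allow' => $field === 'allow', 'path' => $value];
      }
    }
  }

  return $groups;
}

/**
 * Returns the rules a spider should follow: its own group if there is one,
 * otherwise the "*" group, otherwise no rules (everything is allowed).
 */
function rulesForAgent(array $groups, string $agent): array {
  return $groups[strtolower($agent)] ?? $groups['*'] ?? [];
}
```

Matching a named group first and only then falling back to * is why a spider with its own group ignores the wildcard rules entirely.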

To allow search engine spiders to crawl a page, use the Allow rule. For example, to allow all spiders access to the entire site:

```
User-agent: *
Allow: /
```

To stop search engine spiders from crawling a page, use the Disallow rule. For example, to disallow access to a single file for all spiders:

```
User-agent: *
Disallow: /somefile.html
```

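You can also apply rules to a single search engine spider by naming the spider directly. A spider follows only the group that matches it most specifically, so in the following example Googlebot uses its own rules and ignores any rules in the * group.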

```
User-agent: Googlebot
Allow: /
Disallow: /some-private-directory/
```
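In this example, a URL inside /some-private-directory/ matches both Allow: / and Disallow: /some-private-directory/. Under the Robots Exclusion Protocol (RFC 9309) the rule with the longest matching path wins, and Allow wins a tie, so the directory stays blocked while the rest of the site is allowed. The following PHP sketch shows that decision, assuming each rule is represented as an ['allow' => bool, 'path' => string] array; isPathAllowed() is a hypothetical helper and ignores the * and $ wildcards that real crawlers support.

```php
<?php

/**
 * Decides whether a URL path may be crawled under a group's rules.
 *
 * Hypothetical, simplified helper: rule paths are treated as plain prefixes
 * (the "*" and "$" wildcards are not supported). Following RFC 9309, the
 * matching rule with the longest path wins, Allow wins a tie, and a path
 * that matches no rule is allowed.
 *
 * @param array $rules
 *   A list of ['allow' => bool, 'path' => string] arrays.
 * @param string $path
 *   The URL path being requested, for example "/about".
 */
function isPathAllowed(array $rules, string $path): bool {
  $bestLength = -1;
  $allowed = TRUE;

  foreach ($rules as $rule) {
    $rulePath = $rule['path'];
    // An empty path (for example "Disallow:") matches nothing, and a rule
    // only applies when the requested path starts with it.
    if ($rulePath === '' || strpos($path, $rulePath) !== 0) {
      continue;
    }
    $length = strlen($rulePath);
    // Prefer the longest match; on a tie, let Allow override Disallow.
    if ($length > $bestLength || ($length === $bestLength && $rule['allow'])) {
      $bestLength = $length;
      $allowed = $rule['allow'];
    }
  }

  return $allowed;
}

// The Googlebot group from the example above.
$googlebotRules = [
  ['allow' => TRUE, 'path' => '/'],
  ['allow' => FALSE, 'path' => '/some-private-directory/'],
];
var_dump(isPathAllowed($googlebotRules, '/about'));                             // bool(true)
var_dump(isPathAllowed($googlebotRules, '/some-private-directory/page.html'));  // bool(false)
```

Because precedence depends on path length rather than line order, swapping the order of the Allow and Disallow lines would not change the result.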

From a search engine optimisation point of view, it's also considered good practice to add a reference to your sitemap.xml file in your robots.txt file. The Sitemap line takes the full URL of the sitemap and applies regardless of user agent. It looks like the following.

```
Sitemap: https://www.example.com/sitemap.xml
```

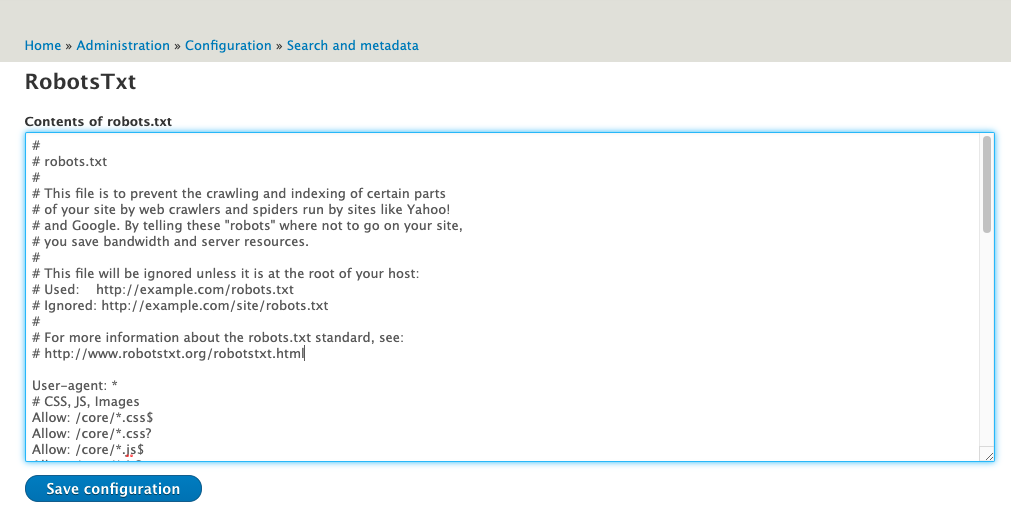

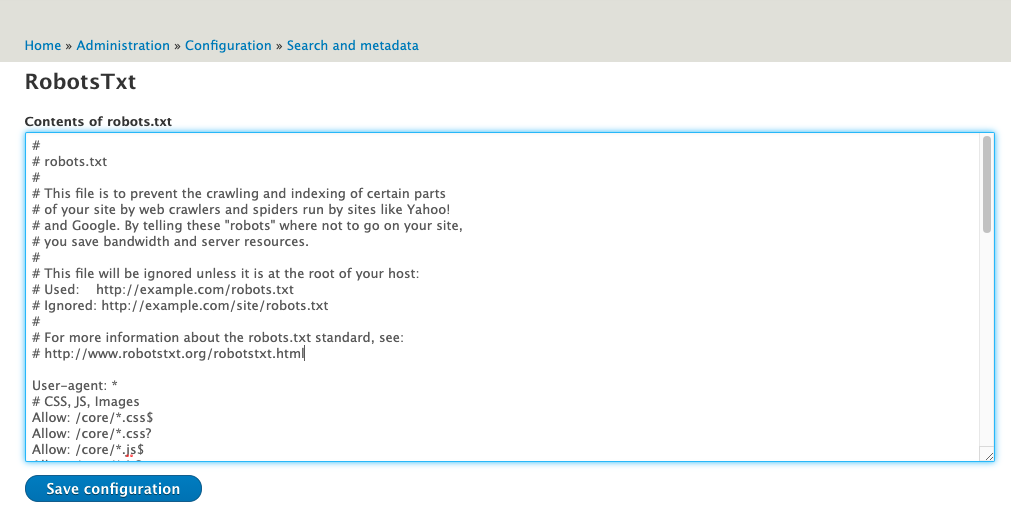

Drupal comes with a pretty complete robots.txt file. There are a few rules to allow access to assets from the core and profiles directories and rules to disallow access to things like the login and password reset pages. As you add more pages and sections to your site you will probably want to modify your robots.txt file to control how the site is spidered.

Rather than hard-coding all of these customisations into the file itself, an alternative approach is to use the RobotsTxt module, which gives administrators access to the robots.txt content through the Drupal admin interface. Your site administrators can then update robots.txt themselves without needing developer help.

The administration form of the RobotsTxt module is pretty simple and consists of a single text area. You just enter your robots.txt content and hit save.

This module can't do anything while the default Drupal robots.txt file is in place, because the web server serves that static file directly and requests for /robots.txt never reach Drupal. The file therefore needs to be removed for the module to work correctly. Deleting the file from your repo is fine, but there is a very good chance that either a Drupal update or your deployment process will add it back in. This is especially the case if you are using Drupal's Composer Scaffold plugin, as it has a provision for copying default files like this into the web root.

For this reason you'll want to automate the removal of this file, and the best place to do this is with scripts that run on Composer events, defined in your composer.json file. The README file of the RobotsTxt module shows how to do this. All that is needed is to add the following to your composer.json file.

```json
"scripts": {
    "post-install-cmd": [
        "test -e web/robots.txt && rm web/robots.txt || echo robots already deleted"
    ],
    "post-update-cmd": [
        "test -e web/robots.txt && rm web/robots.txt || echo robots already deleted"
    ]
},
```

This deletes the robots.txt file from your 'web' directory every time you run composer install or composer update. You'll need to change the 'web' path to match your project's web root.

Although this works, I prefer a slightly different approach that uses a PHP class to find the Drupal web directory automatically and delete the robots.txt file. This needs an additional entry in the 'autoload' section of the composer.json file that points to the PHP class. Note that "autoload" and "scripts" are top-level keys in composer.json, not part of the "extra" section; if your file already has them, add these entries to the existing arrays rather than repeating the keys.

```json
"autoload": {
    "classmap": [
        "scripts/composer/RobotsTxtHandler.php"
    ]
},
"scripts": {
    "post-install-cmd": [
        "DrupalProject\\composer\\RobotsTxtHandler::deleteRobotsTxt"
    ],
    "post-update-cmd": [
        "DrupalProject\\composer\\RobotsTxtHandler::deleteRobotsTxt"
    ]
},
```

All that's needed now is to add the following to a file called scripts/composer/RobotsTxtHandler.php, relative to the directory that contains composer.json. It contains a single class with a static method called deleteRobotsTxt().

```php
<?php

namespace DrupalProject\composer;

use Composer\Script\Event;
use DrupalFinder\DrupalFinder;
use Symfony\Component\Filesystem\Filesystem;

class RobotsTxtHandler {

  /**
   * Delete the robots.txt file from the Drupal web root directory.
   *
   * @param \Composer\Script\Event $event
   *   The Composer script event.
   */
  public static function deleteRobotsTxt(Event $event) {
    if (!class_exists('DrupalFinder\DrupalFinder')) {
      // The DrupalFinder library (webflo/drupal-finder) isn't available, so
      // don't do anything.
      return;
    }

    $drupalFinder = new DrupalFinder();
    if (!$drupalFinder->locateRoot(getcwd())) {
      // No Drupal install was found from the current directory.
      $event->getIO()->write("robots.txt removal: Could not locate the Drupal root.");
      return;
    }

    $fs = new Filesystem();
    $robotsTxtFile = $drupalFinder->getDrupalRoot() . '/robots.txt';

    if ($fs->exists($robotsTxtFile)) {
      $fs->remove($robotsTxtFile);
      $event->getIO()->write("Deleted the default robots.txt file from the Drupal root.");
    }
    else {
      $event->getIO()->write("robots.txt removal: Nothing to delete.");
    }
  }

}
```

With all this in place, run composer install so that Composer regenerates its autoloader and knows about the new class and scripts.

This method works by finding out where your Drupal site is installed and then deleting the robots.txt file if it exists. The benefit of this approach is that it automatically finds the directory that contains your Drupal install, rather than having that path hard-coded into composer.json. I have used it successfully on a couple of projects without any problems.

When you run composer install or composer update and the file is present, you will see the following in your output.

```
Deleted the default robots.txt file from the Drupal root.
```
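If you would rather avoid the DrupalFinder dependency, another option is to read the web root from composer.json itself, since the scaffold configuration already records it under extra.drupal-scaffold.locations.web-root. The following is a sketch of that idea rather than a tested drop-in replacement: robotsTxtPathFromExtra() is a hypothetical helper, and falling back to the project directory when no web root is configured is an assumption.

```php
<?php

/**
 * Builds the path to robots.txt from the root package's "extra" data.
 *
 * Hypothetical helper: reads extra.drupal-scaffold.locations.web-root and,
 * as an assumption, falls back to the project directory when it is unset.
 *
 * @param array $extra
 *   The "extra" section of composer.json, as an array.
 * @param string $projectDir
 *   The directory that contains composer.json.
 *
 * @return string
 *   The expected location of the robots.txt file.
 */
function robotsTxtPathFromExtra(array $extra, string $projectDir): string {
  $webRoot = $extra['drupal-scaffold']['locations']['web-root'] ?? '';
  // Strip a leading "./" and any surrounding slashes, so "web/", "./web"
  // and "/web" all become "web".
  $webRoot = trim(preg_replace('#^\./#', '', $webRoot), '/');
  $base = rtrim($projectDir, '/');
  return $webRoot === '' ? $base . '/robots.txt' : $base . '/' . $webRoot . '/robots.txt';
}

// Example call from a Composer script callback (not executed here):
// $extra = $event->getComposer()->getPackage()->getExtra();
// $robotsTxtFile = robotsTxtPathFromExtra($extra, getcwd());
```

Inside a Composer script, the "extra" array can come from $event->getComposer()->getPackage()->getExtra(), and the project directory from getcwd(), as the class above already does. The trade-off is that this relies on the scaffold configuration being present, whereas DrupalFinder inspects the file system.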

If you are using Drupal's Composer Scaffold plugin, you also have the option of excluding the robots.txt file while the scaffold process runs. To do this, add the following "file-mapping" item to the existing "drupal-scaffold" section, which sits inside the "extra" section of composer.json.

```json
"drupal-scaffold": {
    "locations": {
        "web-root": "web/"
    },
    "file-mapping": {
        "[web-root]/robots.txt": false
    }
},
```

This won't remove a robots.txt file that is already there, so you need to delete it yourself once; after that, the scaffold process will not add it back to your web directory.

The final piece of the puzzle is the configuration of the module. The robots.txt content is stored in the module's configuration, so a configuration import would overwrite any changes your site administrators make. To prevent this, add the config item robotstxt.settings to your configuration ignore settings (for example, with the Config Ignore module); your site administrators will then be able to edit the robots.txt file without their changes being overwritten when the configuration is imported.

Comments

Thanks for this article.

You wrote: All that's needed now is to add the following to a file called scripts/composer/RobotsTxtHandler.php.

But where must this file be added? In the same directory that contains the vendor directory and composer.json? Like this:

- scripts/composer/RobotsTxtHandler.php
- vendor
- composer.json
- composer.lock

Or in the vendor directory?

Are any modifications required in: vendor/composer/autoload_classmap.php?

Submitted by PROMES on Thu, 05/13/2021 - 14:40

Hi Promes,

You were right the first time with your file example. The scripts directory just needs to live at the same level as the composer.json file. You may need to run composer install in order for the classmap to be updated. I forgot to mention that in the article.

You don't need to add any further modifications to vendor/composer/autoload_classmap.php as composer will handle that file itself.

Submitted by philipnorton42 on Thu, 05/13/2021 - 14:43

Hi!

Maybe instead of writing a custom Composer script, we could configure Drupal's Composer Scaffold plugin.

Submitted by Mike on Thu, 05/13/2021 - 17:13

Hi philipnorton42 and Mike,

Thanks for your reply. I already configured Drupal's Composer Scaffold, but when reading your article I got an idea to do some other file handling.

I just ran 'composer install' and then 'composer update' but my script doesn't run.

Maybe I inserted the autoload and scripts lines at the wrong level in composer.json. I inserted them in the 'extra' level. Is this correct?

Submitted by PROMES on Fri, 05/14/2021 - 11:21

Thanks all,

I indeed had to put the extra lines in composer.json one level up (first level).

Submitted by PROMES on Fri, 05/14/2021 - 11:23

Sorry,

I was too excited. After the output of composer I got a line: DrupalProject\composer\RobotsTxtHandler::deleteRobotsTxt, but no real output. Did I forget something?

Submitted by PROMES on Fri, 05/14/2021 - 11:43

Hi Promes,

I just re-tried everything in a new install of Drupal and it all worked fine. The script is just meant to delete your robots.txt file from the web directory. If you see the line you've seen above, then the next line indicates whether or not the file was deleted.

I'll need to give Mike's suggestion a go and write that up as well, as it looks good.

Submitted by philipnorton42 on Fri, 05/14/2021 - 23:56

The solution that Mike suggested is good; I have updated the article to include it. Thanks Mike!

Promes, if you are looking for simplicity then that might be a better solution than the script.

Submitted by philipnorton42 on Sat, 05/15/2021 - 11:29
